🔍 Is Now the Time to Buy AMD?

New chips, growing momentum and a massive AI market. Is AMD a smart buy now?

Last week, AMD held its Advancing AI event, where it shared its vision for AI and explained how it plans to compete in the fast-growing AI market. The event gave a clearer view of AMD’s strategy, its ambitions, and the role it wants to play in shaping AI infrastructure.

In this article, we highlight the most important announcements, explore whether AMD is now in a position to seriously challenge Nvidia, and take a closer look at the stock’s current valuation to answer the big question: is AMD a smart buy right now?

We highlighted AMD as one of our “Best Bulls” back in May. Missed it? You can read it here: Best Bulls – May 2025.

New chips, big ambitions

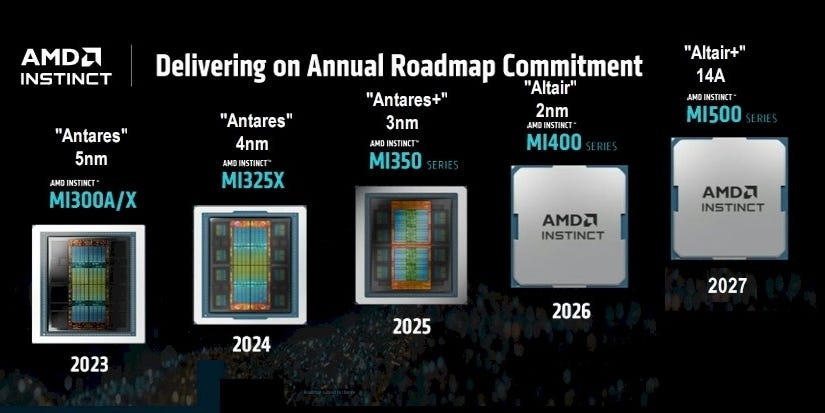

One of the biggest highlights from AMD’s Advancing AI event was the launch of the Instinct MI350X and MI355X, the company’s latest AI chips and the next step after the MI300 series. According to AMD, these new GPUs deliver up to 4x better performance than their predecessors, putting them in direct competition with Nvidia’s Blackwell (B200).

The MI355X stands out even more. It doesn’t just match Blackwell on raw performance — it also comes with 60% more memory. That’s a big deal for training large AI models, which need a lot of memory capacity and speed to run efficiently.

And AMD isn’t stopping there. It also shared what’s coming next: the MI400 series, expected in 2026, is designed to compete with Nvidia’s future Rubin platform. These chips are set to deliver 40 PFLOPS of compute power, along with 432 GB of memory and 19.6 TB/s of bandwidth, specs that suggest AMD is closing the gap in high-end AI hardware.

Looking further ahead, AMD gave a first teaser of the MI500 series, coming in 2027. This generation will take on Nvidia’s Rubin Ultra line. While details are still limited, it’s clear AMD is planning to stay on a fast upgrade cycle, with a new wave of chips coming every year.

Source: AMD Advancing AI 2025 Keynote

Building the full system

AMD also introduced Helios — a new rack system for running AI at a much larger scale. Starting in 2026 with the MI400 chips, this double-wide rack offers more space for GPUs, memory, and networking hardware.

Helios is built with open standards like UALink and Ultra Ethernet, which were developed in collaboration with companies like Microsoft, Meta, and Broadcom. This gives cloud providers more flexibility and helps them avoid getting locked into a single supplier, something many customers prefer.

The racks will combine AMD’s latest Venice CPUs, Vulcano 800G networking cards, and next-gen HBM4 memory, with as much as 432 GB per GPU. AMD says the system can deliver up to 10× more performance than its current MI355X setups. Compared to Nvidia’s upcoming Vera Rubin platform, Helios is expected to offer up to 50% more memory bandwidth and capacity.

With Helios, AMD isn’t just making faster chips. It’s offering a full solution to power the next generation of AI data centers.

Source: AMD Advancing AI 2025 Keynote

Inference: AMD’s biggest opportunity

Training gets most of the attention — but inference is where the real business begins. Every time an AI model generates a result, like a chatbot response or a search suggestion, it uses compute power. That costs money. As CEO Lisa Su put it: “Inference is the tax on intelligence.”

This is exactly where AMD wants to compete. The MI355X is designed to cut that cost. AMD says it delivers up to 40% more tokens per dollar than competing solutions, thanks to higher performance and better energy efficiency. As AI becomes more widely used, those kinds of savings can make a big difference at scale.
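To make the tokens-per-dollar claim concrete, it helps to flip it into cost per token. The short sketch below does only that conversion. The 40% figure is AMD's own claim, and the monthly spend in the example is a hypothetical number chosen purely for illustration.

```python
def cost_per_token_change(tokens_per_dollar_gain: float) -> float:
    """Convert a gain in tokens per dollar into the change in cost per token.

    Cost per token is the inverse of tokens per dollar, so a gain of g
    changes the cost by 1 / (1 + g) - 1 (a negative number means cheaper).
    """
    return 1.0 / (1.0 + tokens_per_dollar_gain) - 1.0


# AMD's claimed gain (taken from the article, not independently verified).
CLAIMED_GAIN = 0.40

# Hypothetical monthly inference bill in USD, for illustration only.
HYPOTHETICAL_MONTHLY_SPEND = 1_000_000

change = cost_per_token_change(CLAIMED_GAIN)
new_spend = HYPOTHETICAL_MONTHLY_SPEND * (1.0 + change)

print(f"Change in cost per token: {change:.1%}")
print(f"Same workload would cost about ${new_spend:,.0f} per month")
```

In other words, 40% more tokens per dollar works out to a cost per token that is a little under 30% lower, not 40% lower, because the two measures are inverses of each other.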

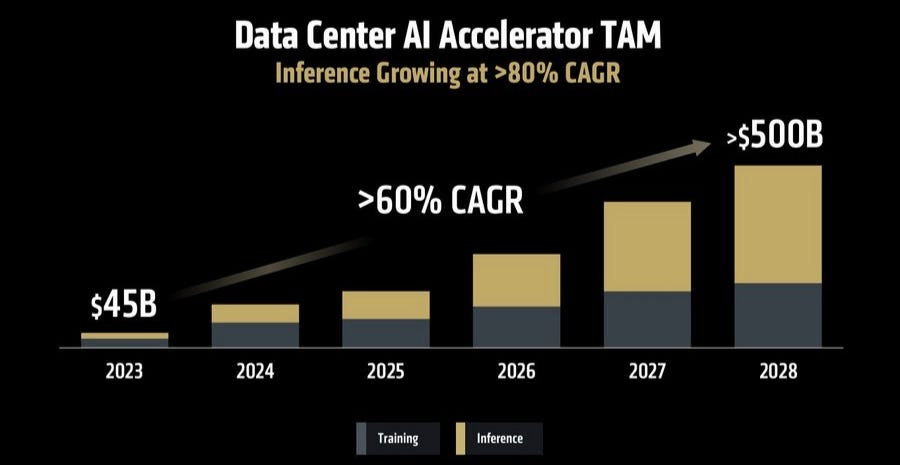

AMD expects inference to be the fastest-growing part of the AI market — with a projected 80% CAGR through 2028.
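As a rough illustration of what an 80% compound annual growth rate means, the sketch below compounds it over one to four years. The base year is not stated here, so the number of years is an assumption; treat the output as a sense of scale rather than a forecast.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Return how many times larger a market is after compounding at `cagr`."""
    return (1.0 + cagr) ** years


# 80% is the projected rate quoted in the article.
INFERENCE_CAGR = 0.80

# The horizon is an assumption: the starting year is not given.
for years in range(1, 5):
    multiple = growth_multiple(INFERENCE_CAGR, years)
    print(f"After {years} year(s): {multiple:.1f}x the starting size")
```

At that pace the market would nearly double every year, which is why even a small slice of it matters.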

And the overall opportunity is huge. The market for AI data center chips is expected to exceed $500 billion by 2028. AMD made around $5 billion in AI revenue in 2024. If it captures just 8–12% market share, that would mean $40–60 billion in annual revenue by that year — a massive step-up from today’s levels.
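The market-share arithmetic above is easy to check, and it also shows how fast AMD would have to grow to get there. The sketch below uses only the figures quoted in this article (a $500 billion market in 2028 and about $5 billion of AI revenue in 2024). The function names are ours, and the 10% midpoint is added for illustration.

```python
def implied_revenue(market_size_bn: float, share: float) -> float:
    """Revenue in USD billions for a given share of the market."""
    return market_size_bn * share


def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to go from `start` to `end`."""
    return (end / start) ** (1.0 / years) - 1.0


# Figures quoted in the article (projections, not independent forecasts).
MARKET_SIZE_2028_BN = 500.0
AMD_AI_REVENUE_2024_BN = 5.0
YEARS = 2028 - 2024

for share in (0.08, 0.10, 0.12):
    revenue = implied_revenue(MARKET_SIZE_2028_BN, share)
    cagr = implied_cagr(AMD_AI_REVENUE_2024_BN, revenue, YEARS)
    print(f"{share:.0%} share: ${revenue:.0f}B revenue, implied CAGR {cagr:.0%}")
```

Under these assumptions, reaching $40 to $60 billion by 2028 would require AMD's AI revenue to compound at roughly 68% to 86% a year from its 2024 level.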

Source: AMD Advancing AI 2025 Keynote

Software and ecosystem catching up

For years, AMD’s software stack was a weak spot, but that’s changing fast. The latest ROCm 7 update adds support for FP4/FP6 precision, distributed workloads, Windows environments, and Radeon GPUs. It now works with top AI libraries and frameworks like FlashAttention, vLLM, PyTorch, and Hugging Face. Performance has improved significantly, with up to 3× faster inference in AMD’s recent benchmarks.

Another important piece is AMD’s 2022 acquisition of Xilinx. Its programmable FPGAs are a good fit for edge AI, telecom, and defense: high-margin, high-reliability markets that help expand AMD’s presence beyond the core data center segment.

Data center growth is here

AMD’s progress is already showing up in the numbers. Since mid-2023, its quarterly data center revenue has roughly tripled. In Q1 2025, revenue in this segment rose 57% year-over-year, thanks to strong demand for the MI300 and early shipments of the new MI350X chips.

Now that we’ve covered the key updates — from software progress to growing momentum in data centers — it’s time for the big question: Is AMD a smart buy at today’s price?

To answer that, we’ll take a closer look at the valuation, what the market is pricing in, and how AMD compares to Nvidia.
